5 Simple Steps to Save Money by Optimizing Your Snowflake Data Architecture

This is the transcript of a video recording. Access the recording in the original post published on Aptitive’s blog.

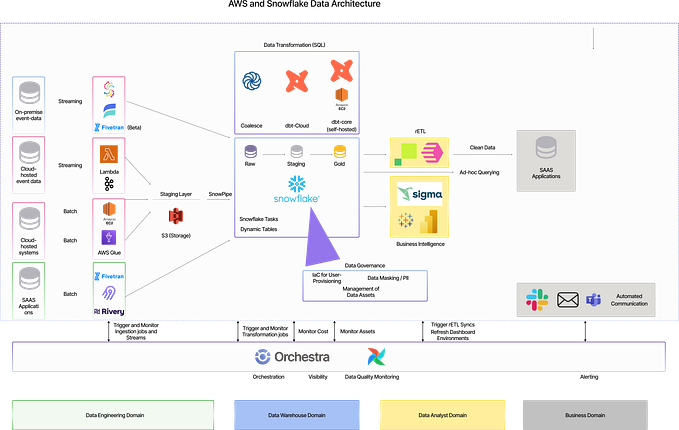

Many organizations are upgrading to Snowflake’s modern data platform from their traditional on-prem data warehouses. While many of the general concepts remain the same, there are a few key differences that can lead you to overspend on your Snowflake implementation. In this video, we’ll cover what these differences are and share tips for optimizing your Snowflake data architecture and reducing costs.

Transcript

This is Greg Marsh with Aptitive and I’m here to discuss five money saving tips for Snowflake. So you’ve purchased Snowflake and you’re spending too much money. This is partially because Snowflake is a modern technology, and must be treated differently than the on-prem systems that you’re used to. In order to understand how to save money on Snowflake, I first wanted to discuss the biggest differences between Snowflake and traditional architectures.

On-prem environments combine the storage and compute into one central location, resulting in the hardware constantly queueing transactions and updating storage. Alternatively, Snowflake separates the storage and compute by constantly making copies of the underlying data into virtual machines, allowing for parallel compute tasks and then only inserting timestamp changes to tables as new immutable data partitions. So in short, to save money on a traditional setup, you tend to focus on maximizing storage. In Snowflake as you will find during this video, the goal is to generally optimize compute.

So onto the money saving tips. The first tip is to load data in the order that you want to use it. So for on-prem you provide partitioning and table constraints to optimize storage before you load, meaning you put in the development work upfront to make sure the data is stored correctly on disk. For example, you might create a file group per fact in your data warehouse, or define a partition per year so it’s easier for the query optimizer to zero in on the correct data set to use. For Snowflake there is no explicit partitioning or constraints. In fact, constraints are not enforced even if you do define them. Data is split into micro partitions automatically as you load. So to save money, the goal is to limit the number of micro partitions that any one query would need to use once the data is loaded. To that end, try not to load your data as a heap. Simply load in a natural ingestion order that you would generally use for your query. So like time or company or line of business, and Snowflake will automatically cluster that type of data together. By creating the partitions together, the query optimizer will be able to prune the number of micro partitions it will need to copy into the virtual machine to create the good results.

The next step may seem obvious, but is key to working in the cloud. It’s to dynamically resize your data warehouse based on your workload. This is the classic example of why you move to the cloud in the first place. For on-prem, you had to size for the worst case scenario, you had to consider the amount of disk, RAM, and compute you needed to handle the maximum amount of concurrent workflows at any one time. However, Snowflake has the advantage of managing the scale virtual machines automatically. Because it’s painless to spin up new virtual warehouses, add clusters, or expand the amount of available memory. You can effectively balance performance and expense. So for example, during your nightly ETL jobs, turn on a large VM, run the complex batch workloads, and then immediately turn them off so you’re not spending any more money. Alternatively, for your report application in the morning, have a small machine run with four clusters when the whole company is trying to update their dashboards in parallel, and then reduced down to one once there are less people pinging the application concurrently. Snowflake is designed to dynamically react to your compute demands, so you should take advantage of it.

Tip three is to model your data. As we discussed, because you’re not spending time to partition or manage the data warehouses hardware, your analytics team can focus on the data engineering aspect of your solution. The biggest issue I have seen with Snowflake deployments is clients pulling in their third normal form transactional data and trying to build reporting directly on top of those tables. They tend to struggle and create the proverbial spaghetti mess, so they move to build a table per report, often seen as views. I would not recommend this approach for Snowflake. Snowflake was designed to act as a data warehouse and that means creating a semantic layer of data that organizes and integrates the information together. In the long term, this will save you money by dramatically simplifying the connection between your reporting applications in Snowflake, and improve the efficiency of the queries that render your reports. You’ll no more have any of those 10,000 line queries for that big end of the month financial report. You know what I’m talking about.

Now, I don’t mean to sound condescending with this next tip, but I have found that working with Snowflake, one of the biggest avoidable expenses, is compensating for complex SQL code with the ability to scale your compute. So in the on-prem world, developers would add all sorts of non clustered indexes to optimize their queries but in Snowflake the tendency is to simply scale up or out on the data warehouse to throw money at the problem. Although it is true that the performance will improve if you double the size of the warehouse, so will the price. So the best practice is to review your SQL to make sure you’re avoiding those expensive commands like distinct or group by order by, especially if they’re unnecessary, and write your queries to minimize the number of micro partitions used to generate the results at.

The final money saving tip to use on Snowflake is to utilize the cache. When on-prem to get more performance, you have to increase the ram or CPU of the underlying machine or groom the execution plan to be more efficient. However with Snowflake, you can boost your short term performance without a bunch of extra spin simply by utilizing the cache effectively. Now, I’m not talking about increasing the size of the warehouse like I mentioned before, but rather about using the three levels of cache that are native to a Snowflake deployment. So starting from the end, the result cache is saved for 24 hours in the Snowflake metadata repository, meaning that for a full day after the most recent run of a query, if nothing about your syntax or the underlying data has changed, Snowflake will return the results set for free in a fraction of a second. This is much more efficient than on-prem. So next, the virtual warehouse cache represents the micro partitions that were already copied to that VM for other earlier queries. So for example, if your query required all the micro partitions from 2020 and then a second query required a subset of those partitions like just May of 2020, Snowflake will reuse the partitions that are already in the data warehouse, therefore skipping the step of needing to copy it from the central storage. This is called warming the cache. So you may intentionally load a warehouse with data to service reports in the morning and leave that warehouse on during the day to increase performance without having to spend more money. Finally, the metadata cache will remember the statistical information on micro partitions that were used to build a query execution plan. Actually, we have no direct control over this cache, but it is useful for improving common queries, even if you allow virtual warehouses to suspend. So by warming the cache and using duplicate queries across reports and limiting the speed of change of the underlying data, Snowflake will allow greater performance for little to no additional money.

So do you want to know more? Aptitive is a top tier Snowflake partner located in Chicago with expertise in everything data, including solution architecture, dashboarding, and advanced analytics. For more resources and content visit our Snowflake page or contact us to discuss other tips for improving Snowflake deployment.

Originally published at https://aptitive.com on June 2, 2020.